Lebesgue differentiation theorem

In mathematics, the Lebesgue differentiation theorem is a theorem of real analysis, which states that for almost every point, the value of an integrable function is the limit of infinitesimal averages taken about the point. The theorem is named for Henri Lebesgue.


Statement

For a Lebesgue integrable real or complex-valued function f on Rn, the indefinite integral is a set function which maps a measurable set A to the Lebesgue integral of  , where

, where  denotes the characteristic function of the set A. It is usually written

denotes the characteristic function of the set A. It is usually written

with λ the n–dimensional Lebesgue measure.

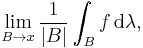

The derivative of this integral at x is defined to be

where |B| denotes the volume (i.e., the Lebesgue measure) of a ball B centered at x, and B → x means that the diameter of B tends to zero.

The Lebesgue differentiation theorem (Lebesgue 1910) states that this derivative exists and is equal to f(x) at almost every point x ∈ Rn. The points x for which this equality holds are called Lebesgue points. Since functions which are equal almost everywhere have the same integral over any set, this result is the best possible in the sense of recovering the function from integrals.

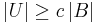
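As a rough numerical illustration of the statement in dimension one, the sketch below averages a function over shrinking intervals centered at a point. The integrand `f`, its hand-computed antiderivative `F`, and the helper `ball_average` are illustrative choices that do not come from the sources cited here. At a point of continuity the averages approach f(x); at the jump they approach the midpoint of the two one-sided values, so the jump is an exceptional point, which is consistent with the theorem because a single point has measure zero.

```python
def f(t):
    """Example integrand (an assumption): continuous except for a jump at t = 1."""
    return t * t if t < 1.0 else 3.0


def F(t):
    """An antiderivative of f, computed by hand: t^3 / 3 for t < 1, linear afterwards."""
    return t ** 3 / 3.0 if t < 1.0 else 1.0 / 3.0 + 3.0 * (t - 1.0)


def ball_average(x, r):
    """Average of f over the one-dimensional ball (x - r, x + r)."""
    return (F(x + r) - F(x - r)) / (2.0 * r)


for x in (0.5, 1.0):
    # Radii 10^-1, ..., 10^-5 shrink the ball toward x.
    averages = [ball_average(x, 10.0 ** (-k)) for k in range(1, 6)]
    print("x =", x, "f(x) =", f(x), "averages:", averages)
```

Using an exact antiderivative rather than numerical quadrature keeps the averages free of integration error, so the only effect visible in the output is the shrinking radius.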

A more general version also holds. One may replace the balls B by a family  of sets U of bounded eccentricity. This means that there exists some fixed c > 0 such that each set U from the family is contained in a ball B with

of sets U of bounded eccentricity. This means that there exists some fixed c > 0 such that each set U from the family is contained in a ball B with  . It is also assumed that every point x ∈ Rn is contained in arbitrarily small sets from

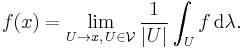

. It is also assumed that every point x ∈ Rn is contained in arbitrarily small sets from  . When these sets shrink to x, the same result holds: for almost every point x,

. When these sets shrink to x, the same result holds: for almost every point x,

The family of cubes is an example of such a family  , as is the family

, as is the family  (m) of rectangles in R2 such that the ratio of sides stays between m−1 and m, for some fixed m ≥ 1. If an arbitrary norm is given on Rn, the family of balls for the metric associated to the norm is another example.

(m) of rectangles in R2 such that the ratio of sides stays between m−1 and m, for some fixed m ≥ 1. If an arbitrary norm is given on Rn, the family of balls for the metric associated to the norm is another example.
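The bounded eccentricity condition for rectangles can be checked by a short computation. The sketch below is an illustration rather than part of the cited sources: it takes B to be the circumscribed disc of an a-by-b rectangle U (the smallest disc containing it), so that |U| / |B| = 4 / (π (a/b + b/a)), and it uses the resulting lower bound c = 4 / (π (m + 1/m)) for side ratios between 1/m and m. The function names `eccentricity_ratio` and `family_constant` are hypothetical.

```python
import math


def eccentricity_ratio(a, b):
    """|U| / |B| for an a-by-b rectangle U and its circumscribed disc B."""
    diameter_squared = a * a + b * b
    disc_area = math.pi * diameter_squared / 4.0
    return (a * b) / disc_area


def family_constant(m):
    """Lower bound c on |U| / |B| when the side ratio lies in [1/m, m]."""
    return 4.0 / (math.pi * (m + 1.0 / m))


m = 3.0
for a, b in [(1.0, 1.0), (1.0, 3.0), (2.0, 1.0), (0.001, 0.003)]:
    # Each rectangle has side ratio in [1/m, m], so the ratio stays above c.
    print((a, b), eccentricity_ratio(a, b), ">=", family_constant(m))

# A very thin rectangle falls outside every such family: its ratio is close to 0.
print(eccentricity_ratio(1.0, 1e-6))
```

The last line shows why some restriction on the side ratio is imposed: for arbitrarily thin rectangles no single constant c > 0 works.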

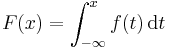

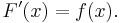

The one-dimensional case was proved earlier by Lebesgue (1904). If f is integrable on the real line, the function

is almost everywhere differentiable, with

Proof

The theorem can be proved as a consequence of the weak–L1 estimates for the Hardy–Littlewood maximal function. The proof below follows Stein & Shakarchi (2005) which is the same as in Wheeden & Zygmund (1977).

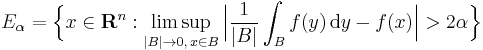

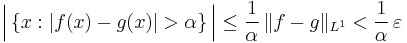

It is sufficient to prove that the set

has measure 0 for all α > 0.

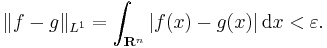

Let ε > 0 be given. Using the density of continuous functions of compact support in L1(Rn), one can find such a function g satisfying

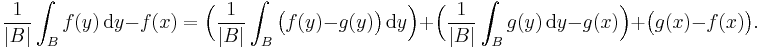

It is then helpful to rewrite the main difference as

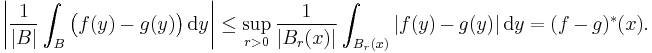

The first term can be bounded by the value at x of the maximal function for f − g, denoted here by  :

:

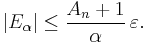

The second term disappears in the limit since g is a continuous function, and the third term is bounded by |f(x) − g(x)|. For the original difference to be greater than 2α in the limit, at least one of the first or third terms must be greater than α. However, the estimate on the Hardy–Littlewood function says that

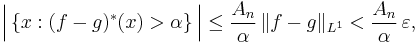

for some constant An depending only upon the dimension n. The Markov inequality (also called Tchebyshev's inequality) says that

whence

Since ε was arbitrary, it can be taken to be arbitrarily small, and the theorem follows.
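The two measure estimates used in the proof can be observed on a discrete analogue. The sketch below is an illustration under stated assumptions, not the argument of the cited texts: it replaces functions on the line by finite sequences extended by zero, replaces Lebesgue measure by counting measure, and uses the constant 3 that a Vitali-type covering argument yields for centered discrete intervals. The names `centered_maximal` and `count_above` are hypothetical.

```python
def centered_maximal(values):
    """Discrete centered maximal function of a finite sequence extended by zero."""
    n = len(values)
    # prefix[i] holds the sum of |values[0]|, ..., |values[i - 1]|.
    prefix = [0.0]
    for v in values:
        prefix.append(prefix[-1] + abs(v))
    maximal = []
    for k in range(n):
        best = 0.0
        # Radius n - 1 already covers the whole support, so larger radii cannot help.
        for r in range(n):
            lo = max(k - r, 0)
            hi = min(k + r, n - 1)
            window_sum = prefix[hi + 1] - prefix[lo]
            # The window has 2r + 1 points; points outside the range contribute zero.
            best = max(best, window_sum / (2 * r + 1))
        maximal.append(best)
    return maximal


def count_above(values, alpha):
    """Counting measure of the set where |values| exceeds alpha."""
    return sum(1 for v in values if abs(v) > alpha)


# A sparse sequence playing the role of f - g (an arbitrary example).
h = [0.0] * 200
h[60], h[100], h[130] = 4.0, -5.0, 2.5
l1_norm = sum(abs(v) for v in h)
h_star = centered_maximal(h)

for alpha in (0.1, 0.5, 1.0):
    # Weak-type bound for the maximal function, then the Markov bound for h itself.
    print(alpha, count_above(h_star, alpha), "<=", 3 * l1_norm / alpha)
    print(alpha, count_above(h, alpha), "<=", l1_norm / alpha)
```

The maximal function exceeds a given level on a much larger set than the sequence itself does, which is why the proof needs the weak-type estimate in addition to the Markov inequality.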

Discussion of proof

The Vitali covering lemma is vital to the proof of this theorem; its role lies in proving the estimate for the Hardy–Littlewood maximal function.

The theorem also holds if balls are replaced, in the definition of the derivative, by families of sets with diameter tending to zero that satisfy Lebesgue's regularity condition, defined above as families of sets with bounded eccentricity. This follows since the same substitution can be made in the statement of the Vitali covering lemma.

Discussion

This is an analogue, and a generalization, of the fundamental theorem of calculus, which equates a Riemann integrable function and the derivative of its (indefinite) integral. It is also possible to show a converse, namely that every differentiable function is equal to the integral of its derivative, but this requires a Henstock–Kurzweil integral in order to be able to integrate an arbitrary derivative.

A special case of the Lebesgue differentiation theorem is the Lebesgue density theorem, which is equivalent to the differentiation theorem for characteristic functions of measurable sets. The density theorem is usually proved using a simpler method (see, e.g., Oxtoby, Measure and Category).
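For a concrete picture of the density theorem, the sketch below computes the proportion of a small interval around x that lies in a set E, where E is a finite union of intervals chosen purely for illustration. The helper names `overlap_length` and `density` are hypothetical. The proportion tends to 1 at interior points of E and to 0 at exterior points; the endpoints, where it tends to 1/2, form a set of measure zero.

```python
def overlap_length(a, b, lo, hi):
    """Length of the intersection of the intervals [a, b] and [lo, hi]."""
    return max(0.0, min(b, hi) - max(a, lo))


def density(intervals, x, r):
    """Proportion of (x - r, x + r) covered by a union of disjoint intervals."""
    covered = sum(overlap_length(a, b, x - r, x + r) for a, b in intervals)
    return covered / (2.0 * r)


# Example set E = [0, 1] union [2, 2.5] (an arbitrary choice).
E = [(0.0, 1.0), (2.0, 2.5)]

for x in (0.5, 1.5, 1.0):
    # Interior point, exterior point, and boundary point of E, in that order.
    print("x =", x, [density(E, x, 10.0 ** (-k)) for k in range(1, 5)])
```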


References

- Lebesgue, Henri (1904). Leçons sur l'Intégration et la recherche des fonctions primitives. Paris: Gauthier-Villars.

- Lebesgue, Henri (1910). "Sur l'intégration des fonctions discontinues". Annales scientifiques de l'École Normale Supérieure 27: 361–450. http://www.numdam.org/item?id=ASENS_1910_3_27__361_0.

- Wheeden, Richard L.; Zygmund, Antoni (1977). Measure and Integral: An Introduction to Real Analysis. Dekker.

- Oxtoby, John C. (1980). Measure and Category. Springer-Verlag.

- Stein, Elias M.; Shakarchi, Rami (2005). Real analysis. Princeton Lectures in Analysis, III. Princeton University Press. pp. xx+402. ISBN 0-691-11386-6. MR 2129625.